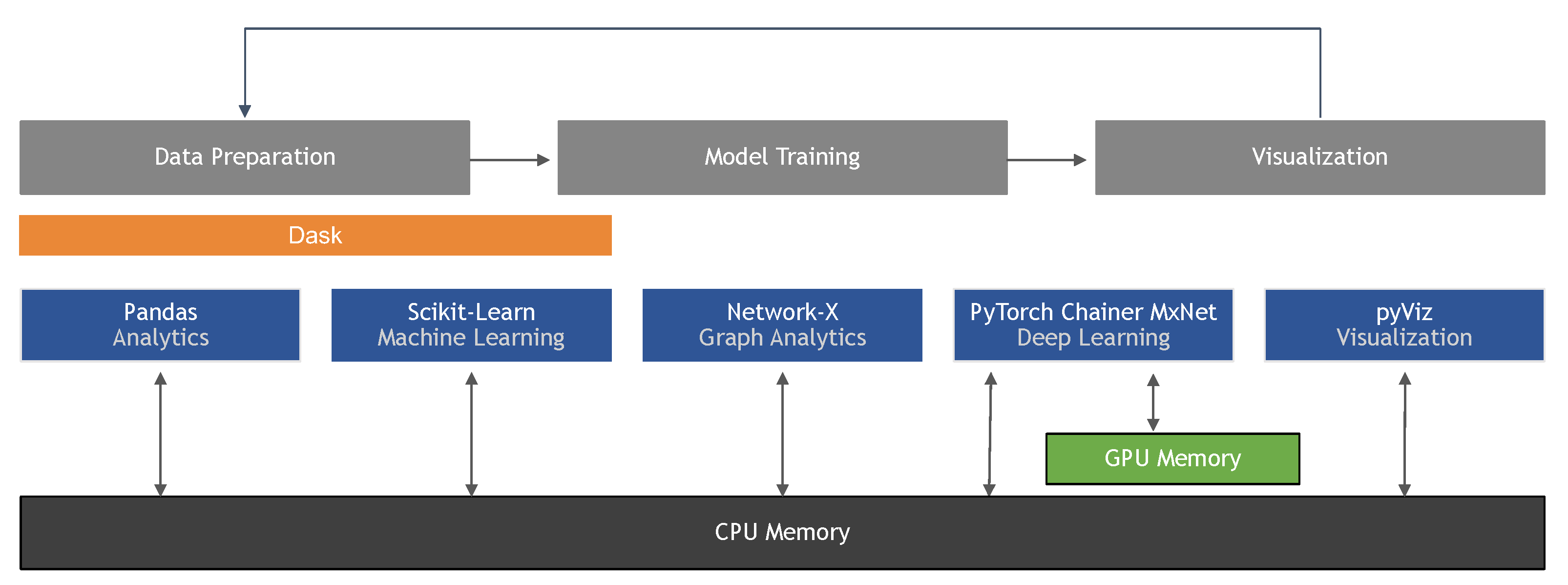

Information | Machine Learning in Python: Main Developments and Technology Trends in Data Science, Machine Learning, and Artificial Intelligence

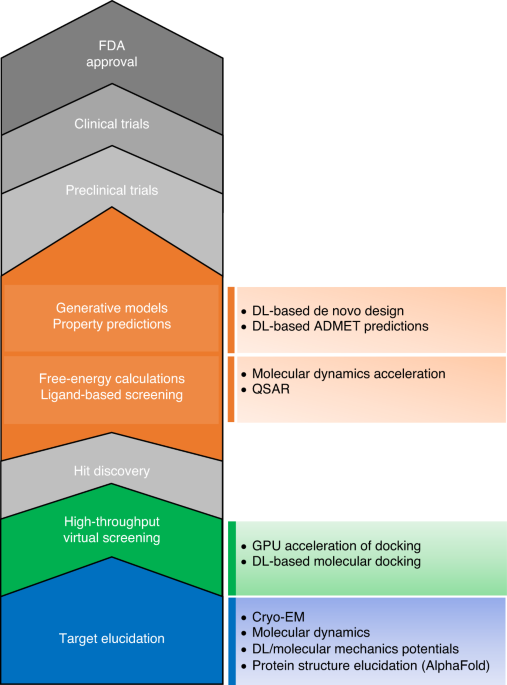

The transformational role of GPU computing and deep learning in drug discovery | Nature Machine Intelligence

![Deep Learning 101: Introduction [Pros, Cons & Uses]](https://assets-global.website-files.com/5d7b77b063a9066d83e1209c/6179f47da28ef9ef0c9b0894_1B_dlL2L4wk1wE6QKqxJWsH1RONOwFL1VZ1QXHnOCEjE357KYPndhytAgD-Vw7ZOpAITQhyNhGrM7SpRWPxpi7rPM_bGDDMUXwnB17NiIAoRlw1Q5DbMqW_DSWTzAwP-wCBheBSO.jpeg)